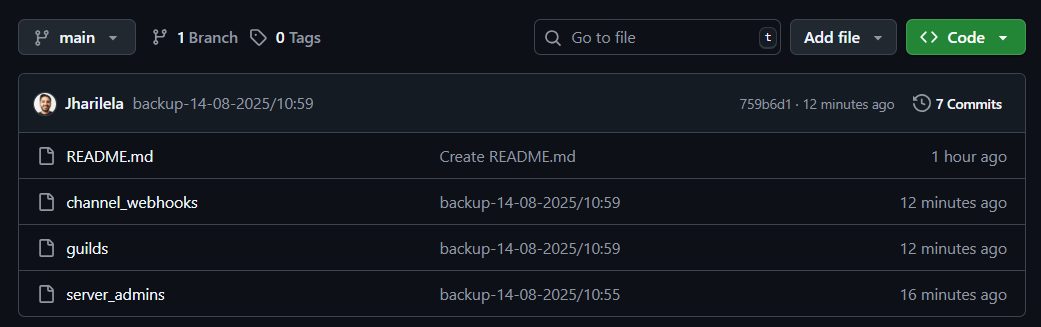

This workflow automatically backs up all public Postgres tables into a GitHub repository as CSV files every 24 hours.

The workflow keeps your database snapshots current: it updates an existing file when a table's data changes and creates a new backup file when a new table appears.
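The sketch below shows one way to decide between updating and creating a file. It assumes the backups are written through the GitHub REST API's "create or update file contents" endpoint (`PUT /repos/{owner}/{repo}/contents/{path}`). That endpoint takes the file body as Base64 in `content` and requires the existing file's `sha` when updating. Because that `sha` is the Git blob hash of the file, comparing it with the hash of the new CSV shows whether the data changed. The helper names and the commit message format are hypothetical, and the workflow itself may detect changes differently.

```python
import base64
import hashlib
from typing import Optional


def git_blob_sha(content: bytes) -> str:
    """Compute the Git blob SHA-1, the value GitHub reports as a file's `sha`."""
    header = f"blob {len(content)}\0".encode()
    return hashlib.sha1(header + content).hexdigest()


def plan_backup(csv_text: str, existing_sha: Optional[str], table: str) -> Optional[dict]:
    """Return a request body for the Contents API, or None if nothing changed.

    existing_sha is the `sha` of the table's file already in the repository,
    or None if the table has no backup yet (a new table).
    """
    data = csv_text.encode("utf-8")
    if existing_sha is not None and git_blob_sha(data) == existing_sha:
        return None  # identical bytes to the stored file: skip the commit
    body = {
        "message": f"Backup {table}",  # hypothetical commit message format
        "content": base64.b64encode(data).decode("ascii"),
    }
    if existing_sha is not None:
        body["sha"] = existing_sha  # GitHub requires the current sha to update a file
    return body
```

A `None` result means the table's data has not changed since the last run, so no commit is made. Otherwise the returned dict is the JSON body of the PUT request. Including `sha` updates the existing file, and omitting it creates a new one.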

How it works:

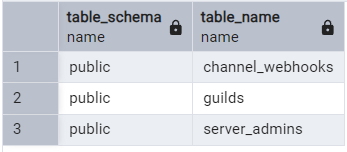

The workflow backs up every table in the Postgres `public` schema.
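The sketch below shows how the workflow might list the tables and build each table's export query. It assumes the table list comes from `information_schema.tables` and that each table is saved as `backups/<table>.csv`. The folder name, the file naming, and the helper functions are all hypothetical. Table names are quoted as Postgres identifiers, so mixed-case names or names with spaces still resolve.

```python
# Query listing the ordinary tables in the public schema (views are excluded).
LIST_PUBLIC_TABLES = """
SELECT table_name
FROM information_schema.tables
WHERE table_schema = 'public'
  AND table_type = 'BASE TABLE'
ORDER BY table_name;
"""


def quote_ident(name: str) -> str:
    """Quote a Postgres identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def export_query(table: str) -> str:
    """Build the query that reads every row of one table in the public schema."""
    return f"SELECT * FROM public.{quote_ident(table)};"


def backup_path(table: str, folder: str = "backups") -> str:
    """Hypothetical repository path for a table's CSV backup."""
    return f"{folder}/{table}.csv"


if __name__ == "__main__":
    for table in ["orders", "Customer Accounts"]:
        print(backup_path(table), "<-", export_query(table))
```

With a real Python driver, psycopg's `sql.Identifier` can handle the identifier quoting instead of the hand-written `quote_ident` helper.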

Use case:

Perfect for developers, analysts, or data engineers who want daily automated backups of their Postgres data without manual exports, while keeping both history and version control in GitHub.

Requirements: